Wazzup Pilipinas!

The Department of Transportation (DOTr) yesterday served the order dismissing from the service Atty. Jesus Eduardo Natividad, Regional Director for the Cordillera Administrative Region (DOTr-CAR), and Datu Mohammad Abbas, Assistant Regional Director of the DOTr-CAR, after being...

Friday, August 24, 2018

Why Your SEO Strategy Must Recognize The Impact Of Machine Learning On Google

With Google pouring billions of dollars into machine learning and artificial intelligence, it is evident that the SEO landscape is set to undergo a radical transformation very soon. Some of the areas that will see major upheavals:

Content

Even though content has already...

Remote DBA Expert Understands The Importance Of Choosing Database For Analytics

Whenever your analytics questions run up against the limits of your current tools, it is time to move to a dedicated database for analytics. It is not a good idea to write scripts that query the production database. Chances are high that you might delete vital information accidentally if you have engineers or...

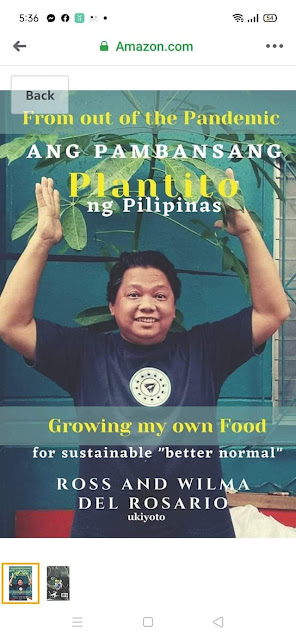

Ang Pambansang Blog ng Pilipinas Wazzup Pilipinas and the Umalohokans.

Ang Pambansang Blog ng Pilipinas, celebrating its 10th year of online presence

Ross is known as the Pambansang Blogger ng Pilipinas, an Information and Communication Technology (ICT) professional by trade and a social media evangelist at heart.
