Wazzup Pilipinas!

When your analytics questions run into the limits of your current tools, it is time to consider a dedicated database for analytics. It is not a good idea to write scripts that query your production database. With engineers or analysts poking around in there, the chances are high that someone deletes vital information by accident. You need a separate database for analysis. Which is the right one to choose?

After working with lots of customers to get their databases up, the criteria that matter most are the amount of data you have, the type of data you are analyzing, the focus of your engineering team and how fast you need it.

Type of data to analyze:

Look at the data you plan to analyze. Does it fit neatly into rows and columns, or would it be more at home in a Word document? If it would fit in Excel, a relational database such as MySQL, Postgres, Amazon Redshift or BigQuery is the one to consider.

These are structured, relational databases, which work very well when you know what data you will receive and how it links together. They are built around the way rows and columns relate.

For most user analysis, a relational database will be the right choice. User traits such as emails, names and billing plans fit nicely into a table, as do user events and their significant properties.
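As a rough illustration of how user traits and events sit in tables, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for Postgres or MySQL. The table names, column names and sample rows are invented for this example and are not taken from any real schema.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with hypothetical users and events tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE users (
            id INTEGER PRIMARY KEY,
            name TEXT,
            email TEXT,
            billing_plan TEXT
        );
        CREATE TABLE events (
            id INTEGER PRIMARY KEY,
            user_id INTEGER REFERENCES users(id),
            event_name TEXT,
            occurred_at TEXT
        );
    """)
    # Sample rows are made up for illustration only.
    conn.executemany("INSERT INTO users VALUES (?, ?, ?, ?)", [
        (1, "Ana", "ana@example.com", "free"),
        (2, "Ben", "ben@example.com", "pro"),
    ])
    conn.executemany("INSERT INTO events VALUES (?, ?, ?, ?)", [
        (1, 1, "login", "2024-01-01"),
        (2, 2, "login", "2024-01-01"),
        (3, 2, "upgrade_viewed", "2024-01-02"),
    ])
    return conn


def events_per_plan(conn):
    """Join events to users and count events for each billing plan."""
    rows = conn.execute("""
        SELECT u.billing_plan, COUNT(e.id)
        FROM users u
        LEFT JOIN events e ON e.user_id = u.id
        GROUP BY u.billing_plan
        ORDER BY u.billing_plan
    """).fetchall()
    return dict(rows)


if __name__ == "__main__":
    print(events_per_plan(build_demo_db()))
```

Because the relationship between the two tables is known up front (each event points at a user), a single join answers the question.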

If the data would fit better on a sheet of paper, look at a non-relational (NoSQL) database such as Mongo or Hadoop.

Non-relational databases work well with large numbers of semi-structured data points. Classic examples of semi-structured data are social media, email, books, audio and visual material, and geographical data.

If you are doing a large amount of text mining, image processing or language processing, you will likely need a non-relational data store. You can get more information on that from RemoteDBA.com.

The amount of data to work with:

You need to know how much data you plan to handle. The more data you have, the more helpful a non-relational database becomes. NoSQL does not impose constraints on incoming data, which lets you write a lot faster.

For under 1TB, you can use Postgres or MySQL. Then you have Amazon Aurora for 2TB to 64TB. Amazon Redshift and Google BigQuery are the main options for 64TB to 2PB. Lastly, Hadoop can cover all of the data sizes mentioned here.

These are not strict limits, as how much each database can handle depends on multiple factors. For under 1TB of data, Postgres offers a good price-to-performance ratio, but it might slow down at around 6TB.

If you like MySQL but need a bit more scale, Aurora can go up to 64TB. At petabyte scale, Amazon Redshift is usually the best bet, as it is optimized for running analytics on up to 2PB.

If you are looking for parallel processing or even MOAR data, you can always try Hadoop.
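The size ranges above can be sketched as a small lookup. This is only a rough rule of thumb under stated assumptions: the function name `suggest_database` is hypothetical, the 1TB to 2TB range that the text leaves unassigned is given to Aurora here, 1PB is taken as 1024TB, and Hadoop is used only as the fallback above 2PB even though it can cover smaller sizes as well.

```python
TB_PER_PB = 1024  # assumption: binary petabytes; use 1000 if you prefer decimal


def suggest_database(size_tb):
    """Return a rough suggestion for a data size given in terabytes.

    The thresholds are loose guidelines, not hard limits.
    """
    if size_tb < 0:
        raise ValueError("size_tb must be non-negative")
    if size_tb < 1:
        return "Postgres or MySQL"
    if size_tb <= 64:
        # Assumption: the unassigned 1TB-2TB range is treated as Aurora territory.
        return "Amazon Aurora"
    if size_tb <= 2 * TB_PER_PB:
        return "Amazon Redshift or Google BigQuery"
    return "Hadoop"


if __name__ == "__main__":
    for size in (0.5, 10, 500, 5000):
        print(size, "TB ->", suggest_database(size))
```

Treat the output as a starting point for discussion; team size and how fast you need the data still weigh in.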

The focus of your engineering team:

Another important question, one that experts from RemoteDBA.com raise during database discussions, is the size and focus of your engineering team. The smaller the team, the more likely you need your engineers focused on building the product rather than on database management and pipelines. The number of people you can dedicate to the project will strongly affect your options.

With more engineering resources, you have a wide array of choices and can opt for either a relational or a non-relational database. Relational databases generally take less time to manage than NoSQL.

If you have engineers to work on setup but no one to help with maintenance, choose Google SQL, Postgres or Segment Warehouse over Aurora, Redshift or BigQuery.

If you have enough time for maintenance, you can select BigQuery or Redshift for faster queries at larger scale.

Relational databases have another advantage: you can query them with SQL. SQL is well known among engineers and analysts and is easier to learn than most programming languages.

By contrast, running analytics on semi-structured data generally requires a background in object-oriented programming or in code-based data science.

Even with the recent arrival of analytics tools like Hunk or Slamdata, analyzing these kinds of data sets still calls for an advanced analyst or data scientist.
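To see why semi-structured data tends to need code rather than a single query, consider a minimal sketch. The records and field names below are invented for illustration. The point is that each record can have a different shape, so the analysis has to cope with missing and nested fields.

```python
import json
from collections import Counter

# Hypothetical records: note that no two have exactly the same fields.
RAW_RECORDS = [
    '{"type": "tweet", "text": "new release today", "geo": {"country": "PH"}}',
    '{"type": "email", "subject": "invoice", "body": "see attached"}',
    '{"type": "tweet", "text": "great talk", "tags": ["conf"]}',
]


def countries_by_type(raw_records):
    """Count records per (type, country), using 'unknown' when a field is absent."""
    counts = Counter()
    for raw in raw_records:
        doc = json.loads(raw)
        # The nested "geo" object is optional, so fall back to an empty dict.
        country = doc.get("geo", {}).get("country", "unknown")
        counts[(doc.get("type", "unknown"), country)] += 1
    return counts


if __name__ == "__main__":
    for key, count in countries_by_type(RAW_RECORDS).items():
        print(key, count)
```

Every optional field adds another branch like the `geo` fallback, which is where the programming background comes in.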

How fast you need the data:

Even though real-time analytics is popular for cases like system monitoring and fraud detection, most analyses do not need real-time data or immediate insights. When you are answering questions such as what causes users to churn or how people move from your app to your website, accessing data with a slight lag is perfectly fine. Your data won’t change every minute.

So, if you are mostly doing after-the-fact analysis, go for a database that is designed under the hood to accommodate large amounts of data and to read and join data quickly. That makes queries run faster.

Such a database will also load data reasonably fast, provided you have someone resizing, vacuuming and monitoring the cluster.

If you really need real-time data, look at an unstructured database such as Hadoop. You can design it to load data very quickly, though queries may take longer at scale depending on RAM usage, available disk space and how the data has been structured.

Once you are sure which database to use, the next step is to figure out how to get your data into it. Building a scalable data pipeline is hard for newcomers, which is why expert help is worth seeking out. It takes time to find the right team, but once you do, their services are worth it.

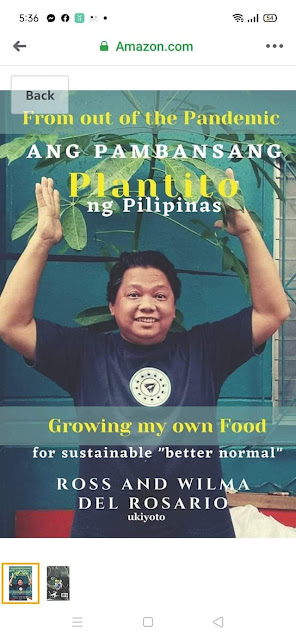

Ross is known as the Pambansang Blogger ng Pilipinas - An Information and Communication Technology (ICT) Professional by profession and a Social Media Evangelist by heart.
